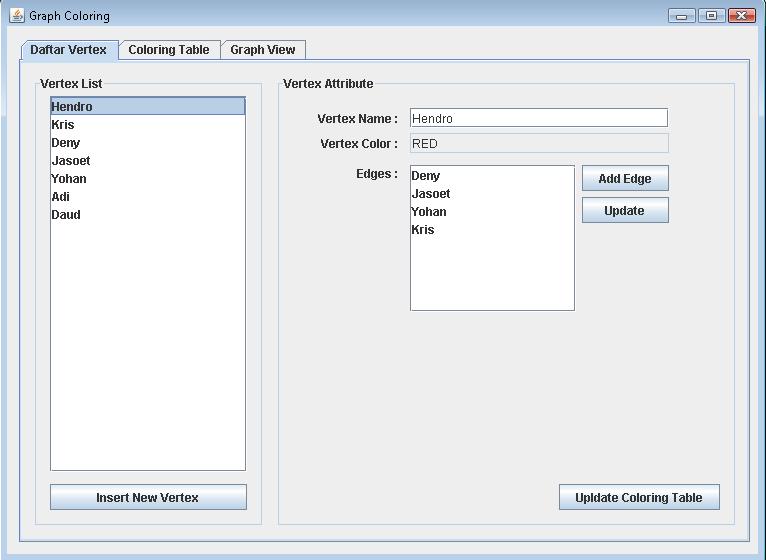

Scheduling Program in Java

The program is built with the HSQLDB database and Java Swing. To schedule lectures, upload a CSV file containing the lecture schedule. To schedule courses, add the course data: courses, course rooms, and course sessions. For the class and module columns, enter the value NULL.

Learn how to use Apache Maven to create a Java-based MapReduce application, then run it with Apache Hadoop on Azure HDInsight.

Note

This example was most recently tested on HDInsight 3.6.

Prerequisites

Java JDK 8 or later (or an equivalent, such as OpenJDK).

Note

HDInsight versions 3.4 and earlier use Java 7. HDInsight versions 3.5 and later use Java 8.

Configure development environment

The following environment variables may be set when you install Java and the JDK. However, you should check that they exist and that they contain the correct values for your system.

- JAVA_HOME: should point to the directory where the Java runtime environment (JRE) is installed. For example, on an OS X, Unix or Linux system, it should have a value similar to /usr/lib/jvm/java-7-oracle. In Windows, it would have a value similar to c:\Program Files (x86)\Java\jre1.7
- PATH: should contain the following paths:
  - JAVA_HOME (or the equivalent path)
  - JAVA_HOME\bin (or the equivalent path)
  - The directory where Maven is installed
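The check described above can be sketched in Java itself. This is a minimal illustration, not part of the original tutorial: the class name `EnvCheck` and its helper methods are hypothetical, and the comparison is a plain string match (it ignores symbolic links and the case-insensitive paths used on Windows).

```java
import java.io.File;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.regex.Pattern;
import java.util.stream.Collectors;

/** Illustrative check of JAVA_HOME and PATH; all names here are hypothetical. */
class EnvCheck {

    /** Splits a PATH-style string on the given separator, dropping empty entries. */
    static List<String> splitPath(String path, String separator) {
        if (path == null || path.isEmpty()) {
            return Collections.emptyList();
        }
        return Arrays.stream(path.split(Pattern.quote(separator)))
                .filter(entry -> !entry.isEmpty())
                .collect(Collectors.toList());
    }

    /** Removes trailing slashes or backslashes so "/a/bin/" and "/a/bin" compare equal. */
    static String stripTrailingSeparators(String s) {
        return s.replaceAll("[/\\\\]+$", "");
    }

    /** Returns true if one of the PATH entries equals the given directory. */
    static boolean pathContains(List<String> entries, String directory) {
        String wanted = stripTrailingSeparators(directory);
        for (String entry : entries) {
            if (stripTrailingSeparators(entry).equals(wanted)) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        String javaHome = System.getenv("JAVA_HOME");
        if (javaHome == null || !new File(javaHome).isDirectory()) {
            System.out.println("JAVA_HOME is not set or is not a directory: " + javaHome);
            return;
        }
        List<String> entries = splitPath(System.getenv("PATH"), File.pathSeparator);
        String javaBin = new File(javaHome, "bin").getPath();
        System.out.println("JAVA_HOME = " + javaHome);
        System.out.println("PATH contains JAVA_HOME/bin: " + pathContains(entries, javaBin));
    }
}
```

Running the class prints the value of JAVA_HOME and whether its bin directory appears on PATH. A similar call to `pathContains` could be used for the Maven directory, whose location depends on your installation.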

Create a Maven project

From a terminal session, or a command line in your development environment, change to the directory where you want to store this project.

Use the mvn command, which is installed with Maven, to generate the scaffolding for the project.

Note

If you are using PowerShell, you must enclose the -D parameters in double quotes. The quoted form below also works in other shells.

    mvn archetype:generate "-DgroupId=org.apache.hadoop.examples" "-DartifactId=wordcountjava" "-DarchetypeArtifactId=maven-archetype-quickstart" "-DinteractiveMode=false"

This command creates a directory with the name specified by the artifactId parameter (wordcountjava in this example). This directory contains the following items:

- pom.xml: The Project Object Model (POM) that contains information and configuration details used to build the project.
- src: The directory that contains the application.

Delete the src/test/java/org/apache/hadoop/examples/AppTest.java file. It is not used in this example.

Add dependencies

Edit the pom.xml file and add the following text inside the <dependencies> section:

This defines required libraries (listed within <artifactId>) with a specific version (listed within <version>). At compile time, these dependencies are downloaded from the default Maven repository. You can use the Maven repository search to view more.

The <scope>provided</scope> setting tells Maven that these dependencies should not be packaged with the application, as they are provided by the HDInsight cluster at run time.

Important

The version used should match the version of Hadoop present on your cluster. For more information on versions, see the HDInsight component versioning document.

Add the following to the pom.xml file. This text must be inside the <project>...</project> tags in the file; for example, between </dependencies> and </project>.

The first plugin configures the Maven Shade Plugin, which is used to build an uberjar (sometimes called a fatjar) that contains the dependencies required by the application. It also prevents duplication of licenses within the jar package, which can cause problems on some systems.

The second plugin configures the target Java version.

Note

HDInsight versions 3.4 and earlier use Java 7. HDInsight versions 3.5 and later use Java 8.

Save the pom.xml file.

Create the MapReduce application

Go to the wordcountjava/src/main/java/org/apache/hadoop/examples directory and rename the App.java file to WordCount.java.

Open the WordCount.java file in a text editor and replace the contents with the following text:

Notice the package name is org.apache.hadoop.examples and the class name is WordCount. You use these names when you submit the MapReduce job.

Save the file.
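As a rough illustration of what a word count job computes, the following plain-Java sketch mimics the map and reduce phases without using the Hadoop API. It is not the WordCount.java file for this tutorial and cannot be submitted to a cluster. The class name `WordCountSketch`, its methods, and the choice to split on whitespace are assumptions made for this example.

```java
import java.util.AbstractMap.SimpleEntry;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.StringTokenizer;
import java.util.TreeMap;

/**
 * Plain-Java illustration of the two phases of a word count job.
 * This is NOT the Hadoop API; all names are hypothetical.
 */
class WordCountSketch {

    /** Map phase: emit a (word, 1) pair for every whitespace-separated token in a line. */
    static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        StringTokenizer tokens = new StringTokenizer(line);
        while (tokens.hasMoreTokens()) {
            pairs.add(new SimpleEntry<String, Integer>(tokens.nextToken(), 1));
        }
        return pairs;
    }

    /** Reduce phase: sum the values emitted for each distinct word (keys kept sorted). */
    static Map<String, Integer> reduce(List<Map.Entry<String, Integer>> pairs) {
        Map<String, Integer> counts = new TreeMap<>();
        for (Map.Entry<String, Integer> pair : pairs) {
            counts.merge(pair.getKey(), pair.getValue(), Integer::sum);
        }
        return counts;
    }

    /** Runs the map phase over every line, then reduces all emitted pairs. */
    static Map<String, Integer> countWords(List<String> lines) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        for (String line : lines) {
            pairs.addAll(map(line));
        }
        return reduce(pairs);
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList("to be or not", "to be");
        // Print one "word<TAB>count" line per distinct word.
        countWords(lines).forEach((word, count) -> System.out.println(word + "\t" + count));
    }
}
```

In a real Hadoop job the framework distributes the map calls across the cluster and groups the emitted pairs by key before the reduce step; here both steps run in a single process to keep the idea visible.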

Build the application

Change to the wordcountjava directory, if you are not already there.

Use the following command to build a JAR file containing the application:

This command cleans any previous build artifacts, downloads any dependencies that have not already been installed, and then builds and packages the application.

Once the command finishes, the wordcountjava/target directory contains a file named wordcountjava-1.0-SNAPSHOT.jar.

Note

The wordcountjava-1.0-SNAPSHOT.jar file is an uberjar, which contains not only the WordCount job, but also dependencies that the job requires at runtime.
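One way to confirm that the built JAR actually contains the job class is to look up its entry with the standard `java.util.jar.JarFile` class. The sketch below is an optional check, not a step from the original tutorial; the class name `JarCheck` and its methods are hypothetical, and the default path assumes the program is run from the wordcountjava directory.

```java
import java.io.File;
import java.io.IOException;
import java.util.jar.JarFile;

/** Illustrative check that a JAR contains a given class; names are hypothetical. */
class JarCheck {

    /** Converts a fully qualified class name to its entry path inside a JAR. */
    static String entryNameFor(String className) {
        return className.replace('.', '/') + ".class";
    }

    /** Returns true if the JAR file contains the compiled class. */
    static boolean containsClass(File jar, String className) throws IOException {
        try (JarFile jarFile = new JarFile(jar)) {
            return jarFile.getJarEntry(entryNameFor(className)) != null;
        }
    }

    public static void main(String[] args) throws IOException {
        // Assumed default location; pass another path as the first argument if needed.
        File jar = new File(args.length > 0 ? args[0] : "target/wordcountjava-1.0-SNAPSHOT.jar");
        String className = "org.apache.hadoop.examples.WordCount";
        System.out.println(className + " present: " + containsClass(jar, className));
    }
}
```

The same lookup can be repeated for a class from one of the bundled dependencies to see the uberjar packaging at work.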

Upload the jar

Use the following command to upload the jar file to the HDInsight head node:

Replace USERNAME with your SSH user name for the cluster. Replace CLUSTERNAME with the HDInsight cluster name.

This command copies the files from the local system to the head node. For more information, see Use SSH with HDInsight.

Run the MapReduce job on Hadoop

Connect to HDInsight using SSH. For more information, see Use SSH with HDInsight.

From the SSH session, use the following command to run the MapReduce application:

This command starts the WordCount MapReduce application. The input file is /example/data/gutenberg/davinci.txt, and the output directory is /example/data/wordcountout. Both the input file and the output are stored in the default storage for the cluster.

Once the job completes, use the following command to view the results:

You should receive a list of words and counts, with values similar to the following text:

Next steps

In this document, you have learned how to develop a Java MapReduce job. See the following documents for other ways to work with HDInsight.

For more information, see also the Java Developer Center.